Suppose you are a Data Scientist who has solved many data problems. You probably solved many of them by building models from readily and freely available data, or from data you paid for from the organization that owned it.

What if you came across a problem where the data (and labels) are not available? Depending on the specific business requirements, you would talk with the Data Engineering team and set up data acquisition and data labeling.

This process often becomes expensive, depending on the domain (medical, industrial) and on how much data needs to be labeled.

In suitable scenarios, you can avoid labeling your entire dataset: instead, you label a few carefully chosen examples and propagate their labels to the rest. This saves both money and time.

That's what Active Learning is.

Active learning is the process of prioritizing which data to label so that the labeling has the highest impact on training a supervised model.

But you may ask, why specific examples? Why not choose to label a random sample from the acquired data?

The problem lies with the quality of the labeled data.

Machine learning programs are decidedly effective at spotting patterns, associations, and rare occurrences in a pool of data. But if we label a random sample, rare cases and subtle variations may barely appear in it, which makes it much harder for an ML model to learn these complex patterns.

What we want is for the model to capture just enough of the dataset's essential, complex properties to reach modest performance. Our goal should be to create a labeled dataset that includes the variations within each of our classes.

We can then use this modest model's predictions to label a much larger dataset, and training on that dataset gives us an even more reliable, better-performing model.

For example, suppose we have 10,000 new data points covering 10 different classes, and we can label only 1,000 of them, either because that is our budget or because that number is enough to create a modestly performing model. We'll create a 1,000-example labeled dataset with the same number of examples for each class (100 per class) that represents as many of the variations within each class as possible. We'll then label additional data after evaluating the resulting model.

Let's go over a few common techniques of Active Learning.

All active learning techniques rely on some number of examples with accurate, ground-truth labels. Where they differ is in how they use these labeled data points to identify and label unknown data points.

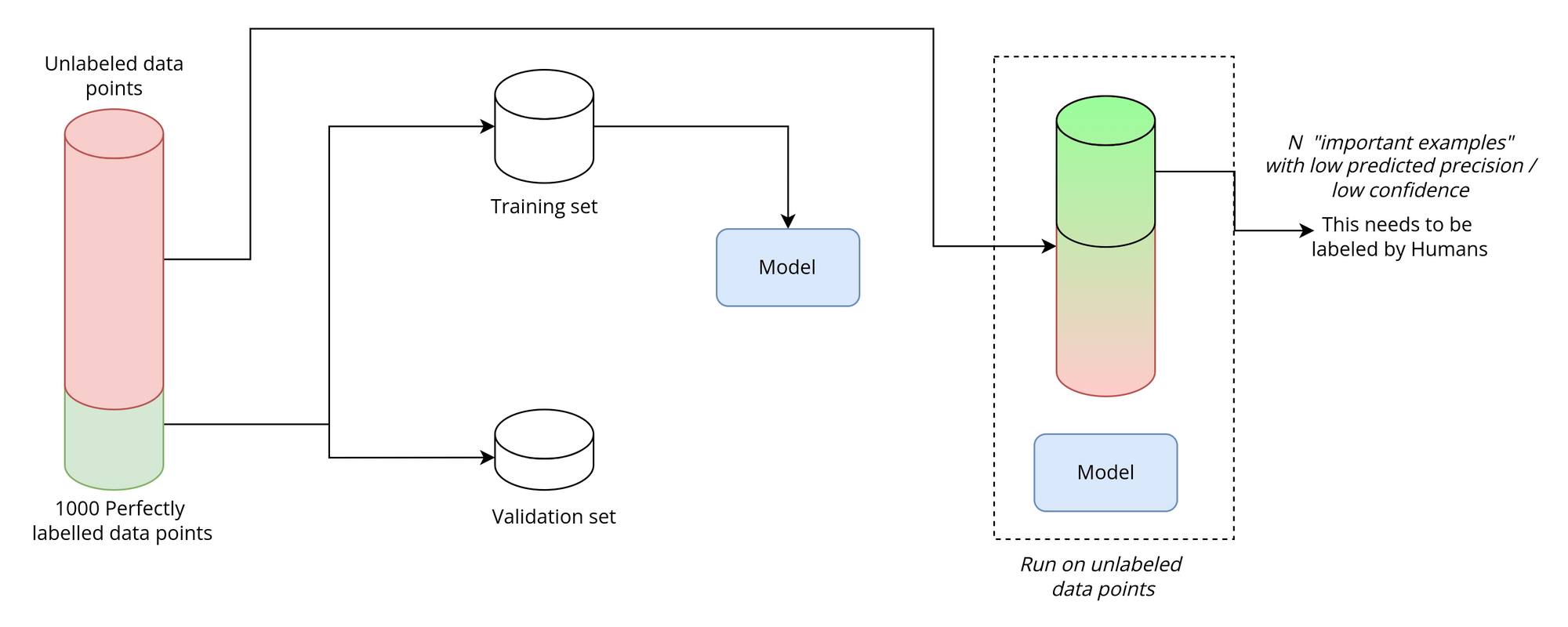

Pool-Based Sampling

Pool-based sampling is probably the most common technique in active learning, despite being memory intensive.

In pool-based sampling, we estimate the "information usefulness" of every example in the unlabeled pool and select the top N examples to label and add to our training data.

For example, if we already have 1,000 perfectly labeled data points, we can train on 800 of them and validate on the remaining 200. The resulting model then lets us identify which of the remaining 9,000 unlabeled examples are likely to be most helpful for improving performance: typically, those on which the model's predictions have the lowest confidence.

We'll select the top N of these lowest-confidence examples and label them.

These N new "important" data points, together with our original 1,000, pave the way to a much more effective model.
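To make the selection step concrete, here is a minimal Python sketch of one common way to score "information usefulness": least-confidence sampling, where an example's score is one minus the model's highest predicted class probability. The article doesn't name a specific scoring rule, so this choice is an assumption, as are the function names `least_confidence_scores` and `select_top_n` and the use of a scikit-learn-style `predict_proba` output as input. The random probabilities below are a hypothetical stand-in for real model predictions on the 9,000 unlabeled examples.

```python
import numpy as np


def least_confidence_scores(probs: np.ndarray) -> np.ndarray:
    """Uncertainty per example: 1 - highest predicted class probability.

    `probs` has shape (n_examples, n_classes), e.g. the output of a
    scikit-learn-style `predict_proba` call on the unlabeled pool (assumption).
    """
    return 1.0 - probs.max(axis=1)


def select_top_n(probs: np.ndarray, n: int) -> np.ndarray:
    """Return indices of the n pool examples the model is least confident about."""
    scores = least_confidence_scores(probs)
    # argsort is ascending, so reverse it to put the most uncertain examples first.
    return np.argsort(scores)[::-1][:n]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical stand-in for predictions on 9,000 unlabeled examples, 10 classes.
    logits = rng.normal(size=(9000, 10))
    probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
    to_label = select_top_n(probs, n=500)
    print(to_label.shape)  # prints (500,)
```

The returned indices are the examples you would send to a labeler; after labeling, you retrain on the enlarged set and can repeat the scoring on what remains of the pool.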

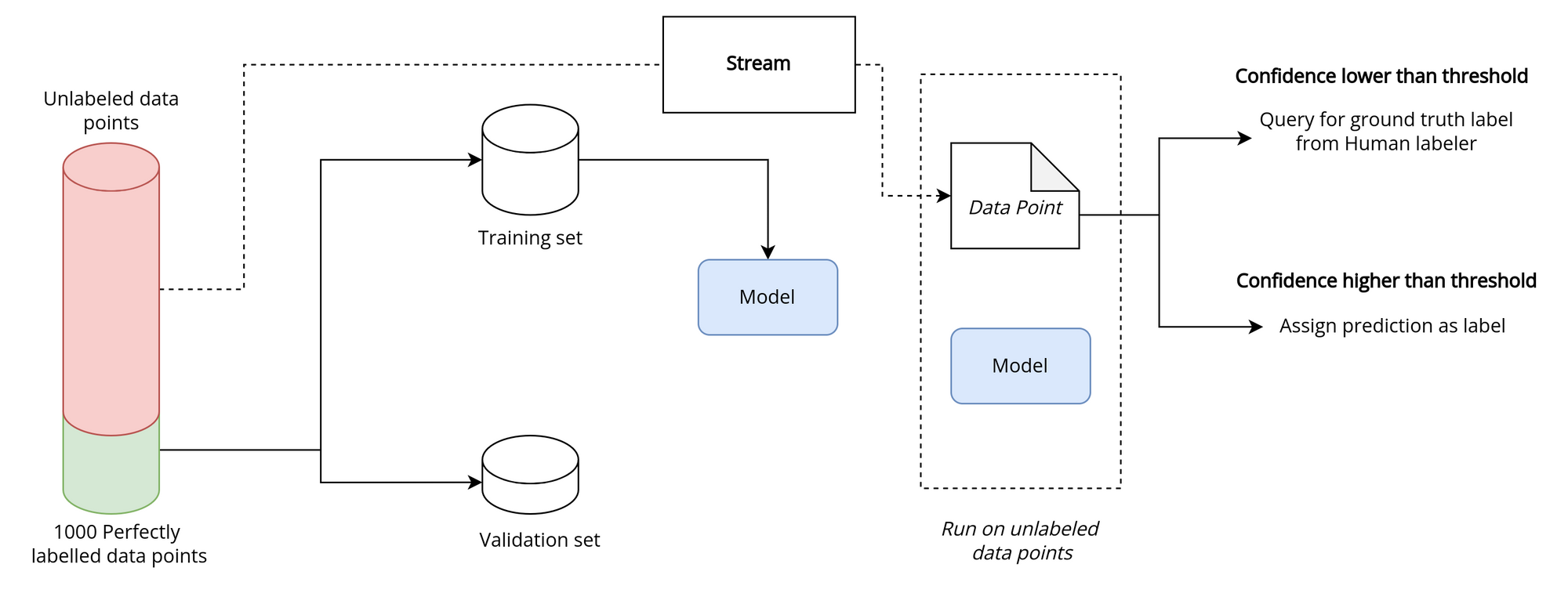

Stream-Based Selective Sampling

This is another active learning technique, and an intuitive one.

In this technique, unlabeled examples are presented to the model one at a time, and for each one the active learning system decides whether to query for the ground-truth label or to keep the model's predicted label, based on a confidence threshold we set.

Unlike pool-based sampling, it is not memory intensive, but it is an exhaustive search, since each example has to be examined one by one. It can also easily exhaust our 1,000-label budget: if the model doesn't encounter enough "important" examples early on, it keeps querying for true labels.

Let's take another example. We have a moderately performing model trained on 1,000 perfectly labeled data points, and we want to improve on its performance. To do so, we plan to label 1,000 more examples using stream-based selective sampling. We go through the remaining 9,000 examples in our dataset one by one and ask the model to evaluate each one. If its confidence is below the threshold, we ask a labeler to assign a label; otherwise, we keep the model's prediction as the label.

We may not get through all 9,000 examples, since labeling stops once the 1,000-example budget is spent. As a result, the labeled examples may be less informative than the ones pool-based sampling would pick, because pool-based sampling ranks the entire pool before choosing.
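The loop described above might look roughly like the following sketch. It assumes the model exposes class probabilities, treats the highest probability as the model's "confidence", and stops once the labeling budget is spent. `predict_proba` and `ask_labeler` are hypothetical callables standing in for your model and your human labeler, and the threshold of 0.8 is an arbitrary illustrative value.

```python
from typing import Callable, Iterable, List, Sequence, Tuple

# Each record is (example, label, source), where source is "human" or "model".
Record = Tuple[Sequence[float], int, str]


def stream_selective_sampling(
    stream: Iterable[Sequence[float]],
    predict_proba: Callable[[Sequence[float]], List[float]],
    ask_labeler: Callable[[Sequence[float]], int],
    threshold: float = 0.8,
    budget: int = 1000,
) -> Tuple[List[Record], int]:
    """Label a stream one example at a time, querying a human only when unsure."""
    labeled: List[Record] = []
    queries = 0
    for x in stream:
        # Stop as soon as the human-labeling budget is used up.
        if queries >= budget:
            break
        probs = predict_proba(x)
        confidence = max(probs)
        if confidence < threshold:
            # Low confidence: pay for a ground-truth label.
            labeled.append((x, ask_labeler(x), "human"))
            queries += 1
        else:
            # High confidence: keep the model's predicted class as the label.
            labeled.append((x, probs.index(confidence), "model"))
    return labeled, queries


if __name__ == "__main__":
    # Toy binary "model": the first feature is used directly as P(class 0).
    toy_model = lambda x: [x[0], 1.0 - x[0]]
    toy_labeler = lambda x: 1  # hypothetical human who always answers class 1
    data = [[0.9], [0.5], [0.95], [0.6], [0.99]]
    records, used = stream_selective_sampling(
        data, toy_model, toy_labeler, threshold=0.8, budget=1
    )
    print(used, len(records))  # prints 1 2
```

In the toy run, the budget of one query is spent on the second example, so the loop stops before seeing the last three; this is the early-exhaustion effect described above.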

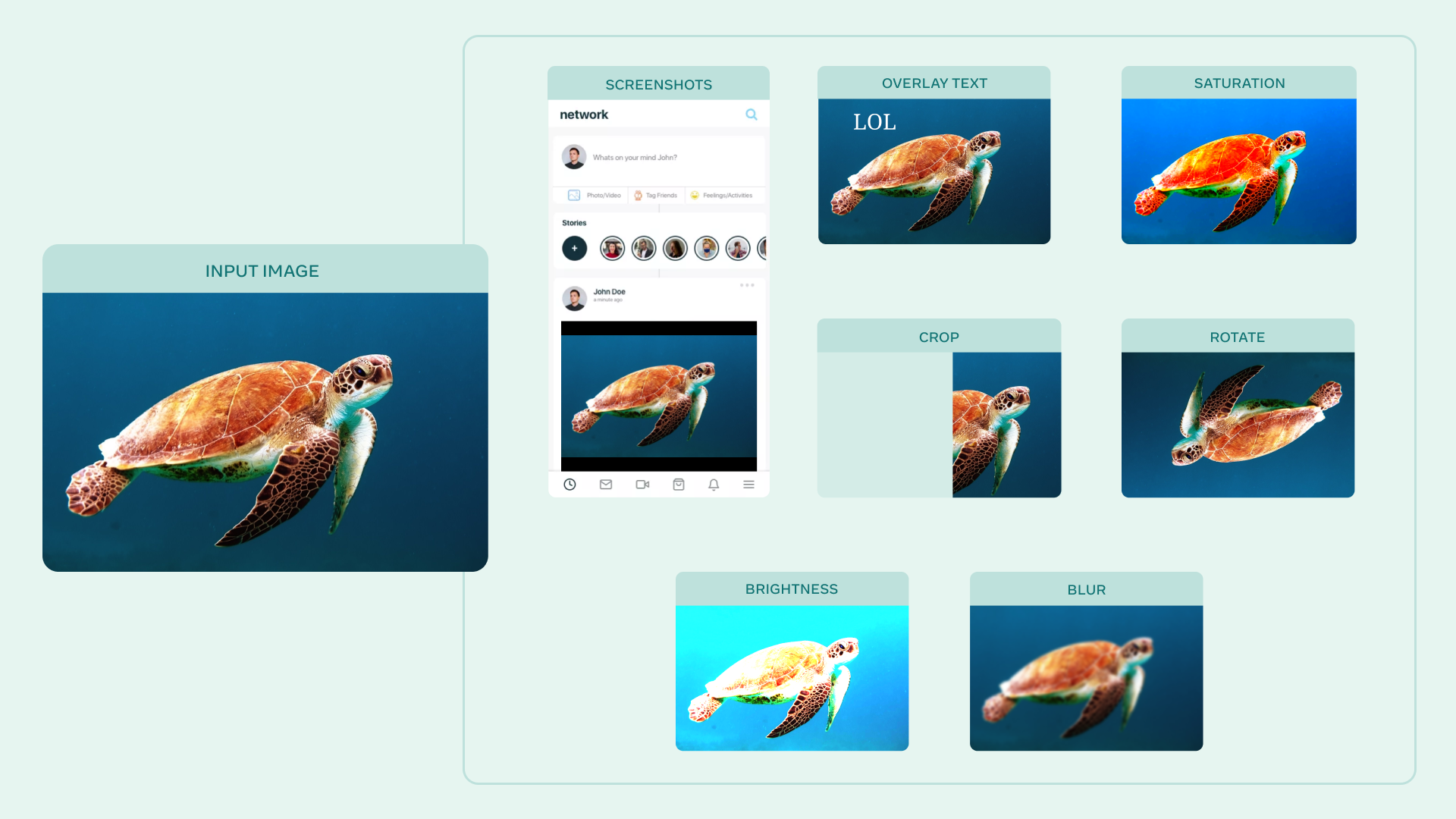

Membership Query Synthesis

This is an active learning technique in which we create new training examples from the data points we already have. This might sound spurious, but it is actually plausible.

Analyzing trends in the data and then carefully applying regression models or GANs can expand our starting training dataset. Data augmentation, a technique often used for balancing datasets and for regularization, can also be used to generate new data points.

This technique is less limiting than the two methods above, but it requires careful analysis of the dataset and of the variations possible in the examples. If some variations are missing from a subset of examples, an effective data augmentation technique will be needed to fill that gap.

For example, if we are building an image classification model for sea creatures, lighting conditions may often be poor, so many images are taken in low light. We can use brightness augmentation for this purpose. Or, if images are sourced from screenshots in production, we can augment each image by embedding it in a fake screenshot, and so on.
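For the low-light case above, a brightness augmentation can be as simple as scaling pixel intensities. The sketch below assumes images are `uint8` NumPy arrays with values from 0 to 255; the function names `adjust_brightness` and `random_low_light` and the 0.3 to 0.8 darkening range are illustrative assumptions, not values taken from any particular augmentation library.

```python
import numpy as np


def adjust_brightness(image: np.ndarray, factor: float) -> np.ndarray:
    """Scale pixel intensities by `factor` (< 1 darkens, > 1 brightens)."""
    scaled = image.astype(np.float32) * factor
    # Keep values in the valid 8-bit range before converting back.
    return np.clip(scaled, 0, 255).astype(np.uint8)


def random_low_light(
    image: np.ndarray, rng: np.random.Generator, low: float = 0.3, high: float = 0.8
) -> np.ndarray:
    """Simulate a poorly lit photo by darkening with a random factor in [low, high)."""
    return adjust_brightness(image, rng.uniform(low, high))


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    # Hypothetical 64x64 RGB image standing in for a real sea-creature photo.
    photo = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
    darker_copies = [random_low_light(photo, rng) for _ in range(5)]
    print(len(darker_copies), darker_copies[0].dtype)
```

Each darkened copy keeps the label of the original photo, which is why this kind of synthesis doesn't need a human labeler.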

Popular libraries for these kinds of augmentations include, for example:

The 1,000-label budget becomes less of a concern with this technique, since no human labeler is required. You can still think of the budget as being spent whenever we synthesize a new example, and you are free to use all of it with this technique.

That's all for today.

This is Anurag Dhadse, signing off.