Can a machine learning problem be broken into a series of ML problems?

Perhaps you have never thought about that. Sometimes it can, and how and when to do it is the theme of this article.

So, let's understand the problem first.

Say we want to train a model for anomaly detection, or for any task that requires it to predict both usual and unusual activity. Unless some preprocessing or rebalancing is done on the data, the model will not learn the unusual activity because it is rare. If the unusual activity is also associated with abnormal values, then trainability suffers.

Let's suppose we are trying to train a model to predict the likelihood that a customer will return an item they have purchased. If we simply train a binary classifier, the return behavior of resellers is hard to capture, because reseller transactions are vastly outnumbered by the millions of transactions made by retail buyers. We might not know at the time of purchase whether it is being made by a retail buyer or a reseller. However, by monitoring other marketplaces, we have identified when items bought from us were subsequently resold.

One way to solve this could be to overweight the reseller instances when training the model. But then we would trade away accuracy on the far more common retail buyer use case in order to optimize for the reseller use case.

The best way might be to use the Cascade design pattern, breaking the whole problem into three distinct problems:

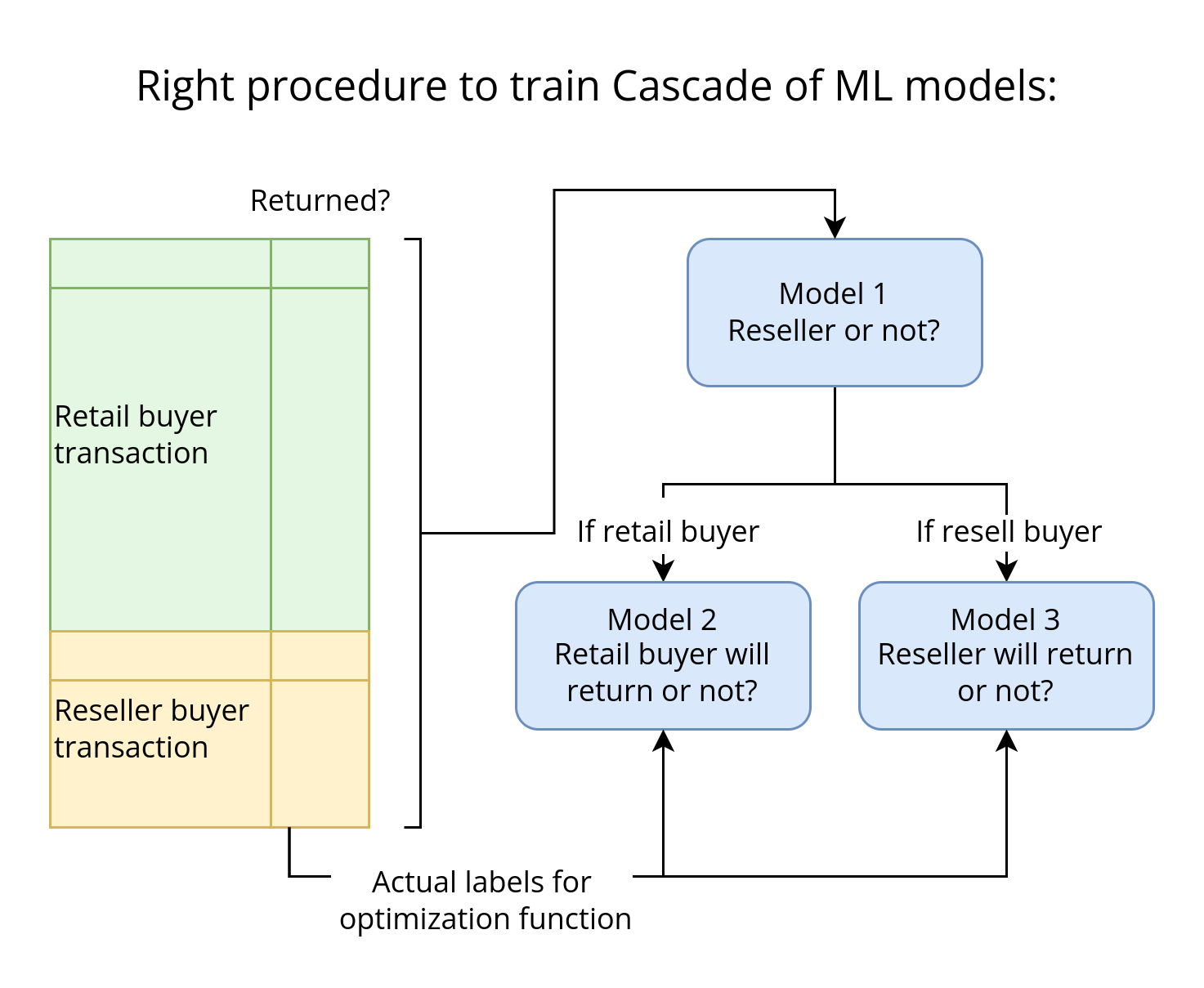

- Predict whether a specific transaction is by a reseller: reseller or not?

- Train one model on sales to retail buyers: will the retail buyer return the item or not?

- Train a second model on sales to resellers: will the reseller return the item or not?

Then combine the outputs of the three models to predict, for every item purchased, the return likelihood and the probability that the transaction is by a reseller.

This allows for the possibility of different decisions on items likely to be returned depending on the type of buyer, and ensures that the models in steps 2 and 3 are as accurate as possible.
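One way to read the combination step is through the law of total probability. The sketch below assumes each of the three models outputs a probability; the function name and its arguments are hypothetical and only illustrate the idea.

```python
def combined_return_probability(p_reseller, p_return_if_reseller, p_return_if_retail):
    """Blend the two segment models, weighted by the step-1 probability.

    Assumption: all three arguments are probabilities in [0, 1] produced by
    the three (hypothetical) models described above.
    """
    for p in (p_reseller, p_return_if_reseller, p_return_if_retail):
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"expected a probability in [0, 1], got {p}")
    return (p_reseller * p_return_if_reseller
            + (1.0 - p_reseller) * p_return_if_retail)


# Made-up numbers: a transaction that is probably retail (10% reseller).
p = combined_return_probability(0.10, 0.60, 0.05)
print(round(p, 3))  # 0.105
```

A hard routing rule (pick one segment model based on a threshold on the reseller probability) is a possible alternative; the weighted blend simply keeps the uncertainty of the first model in the final number.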

In addition to that, in the first step, we can use rebalancing to address the imbalanced distribution of transactions from retail buyers and resellers.
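As a rough illustration of rebalancing by weighting, the sketch below computes per-class weights inversely proportional to class frequency. The labels and counts are made up, and the helper name is hypothetical; many training APIs accept such per-example weights, but check the one you use.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count).

    Rare classes get proportionally larger weights, so every class
    contributes the same total weight to a weighted loss.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Toy data (made up): 98 retail transactions and 2 reseller transactions.
labels = ["retail"] * 98 + ["reseller"] * 2
weights = balanced_class_weights(labels)

# One weight per training example, in the same order as `labels`.
sample_weights = [weights[label] for label in labels]
print(weights["reseller"])  # 25.0
```

Downsampling the majority class or upsampling the minority class are other common ways to rebalance; weighting has the advantage of not discarding or duplicating rows.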

But how do we do this?

Solution

Any machine learning problem where the output of one model is an input to the following model or determines the selection of subsequent models is called a cascade.

For example, a machine learning problem that sometimes involves unusual circumstances can be solved by treating it as a cascade of four machine learning problems:

- A classification model to identify the circumstance

- One model trained on unusual circumstances

- A separate model trained on typical circumstances

- A model to combine the outputs of the two separate models, because the final output is a probabilistic combination of the two

This might look very similar to an Ensemble of models but is actually different because of the special experiment design required when doing a cascade.

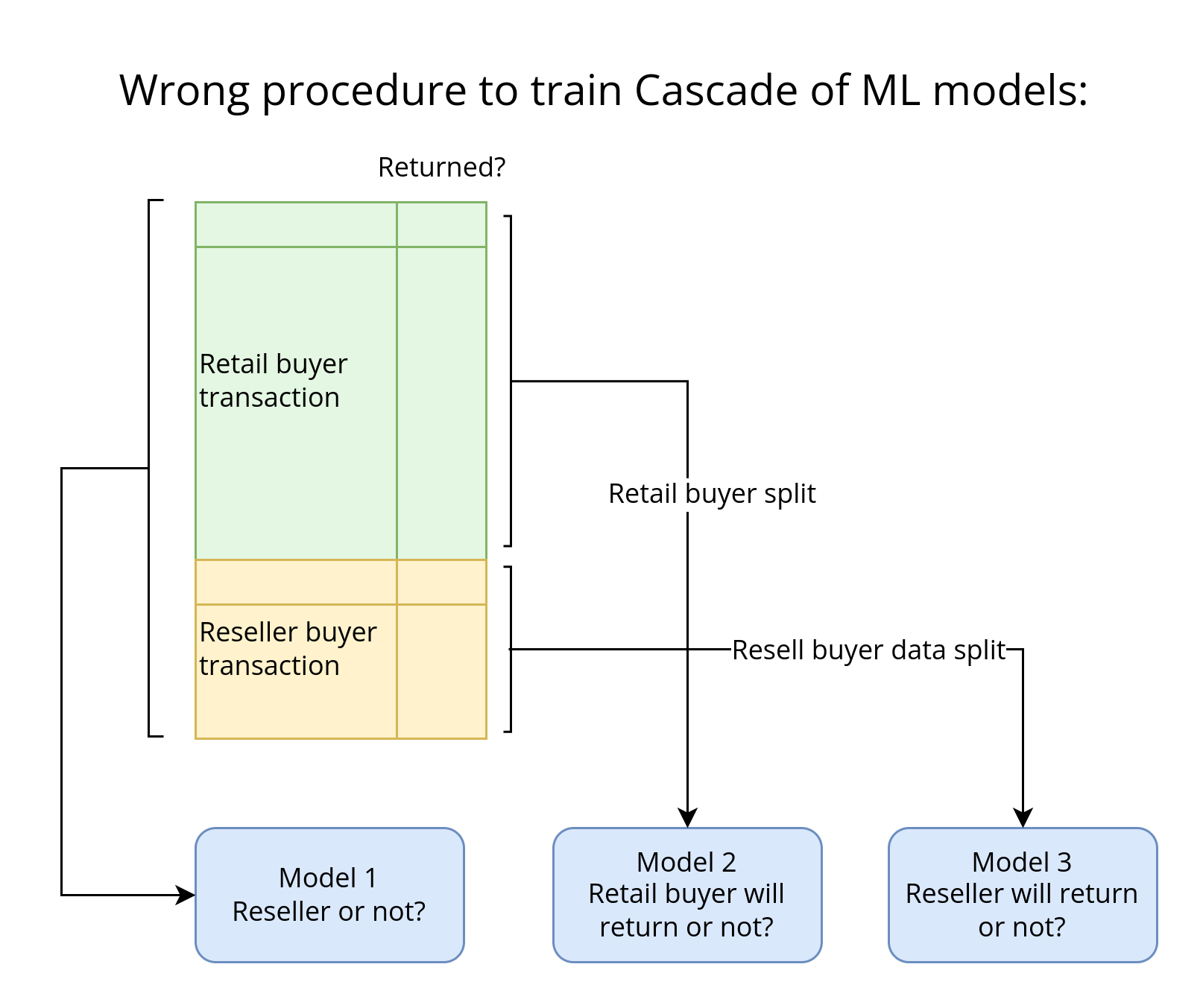

Indeed, the models after step 1 should not be trained on a split of the training data made from the actual labels, independently of the model in step 1. Instead, they should be trained on data selected by the output of the first model in the cascade, with the ground truth labels guiding the optimization.

So, the predictions of the first model are used to create the training dataset for the next models.
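A minimal sketch of that experiment design, using the reseller example from earlier: the training rows are routed by the first model's prediction rather than by the true `is_reseller` label. The field names, the toy rows and `toy_step1_model` are all hypothetical stand-ins.

```python
def split_by_prediction(rows, predict_is_reseller):
    """Route each training row by the step-1 model's prediction.

    The ground-truth `is_reseller` field is deliberately ignored here:
    at serving time only the prediction is available, so the downstream
    models should see the same (imperfect) routing during training.
    """
    reseller_rows, retail_rows = [], []
    for row in rows:
        if predict_is_reseller(row):
            reseller_rows.append(row)
        else:
            retail_rows.append(row)
    return reseller_rows, retail_rows


# Hypothetical stand-in for a trained step-1 classifier.
def toy_step1_model(row):
    return row["quantity"] >= 10


# Made-up rows: `returned` is the label for the step-2 and step-3 models.
rows = [
    {"quantity": 1, "is_reseller": False, "returned": False},
    {"quantity": 12, "is_reseller": True, "returned": True},
    {"quantity": 15, "is_reseller": False, "returned": False},  # misrouted
    {"quantity": 2, "is_reseller": True, "returned": True},     # misrouted
]

reseller_train, retail_train = split_by_prediction(rows, toy_step1_model)
print(len(reseller_train), len(retail_train))  # 2 2
```

The two misrouted rows are the point of the exercise: each downstream model learns from the kind of mistakes the first model will also make in production.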

Also, rather than training the models individually, it is better to automate the entire workflow using a workflow automation framework such as Kubeflow Pipelines or TFX.

Trade-Offs and Alternatives

Cascade is not necessarily a best practice. It adds quite a bit of complexity, can be hard to debug when the data is bad, and is hard to maintain. Remember, if the data changes, all the models in the cascade need to be retrained.

Also, try to avoid having multiple machine learning models in the same pipeline, as the Cascade pattern does. Where possible, limit a pipeline to a single machine learning problem.

Deterministic inputs

Splitting an ML problem is usually a bad idea, since an ML model can and should learn combinations of multiple factors. For example:

- If a condition can be known deterministically from the input (for example, whether an article comes from a news website or from an individual), we should just add the condition as one more input to the model.

- If the condition involves extrema in just one input (for example, customers who live nearby versus far away, with the meaning of near and far needing to be learned from the data), we can use Mixed Input Representation to handle it.

The Cascade design pattern addresses an unusual scenario for which we do not have a categorical input, and for which extreme values need to be learned from multiple inputs.
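For the first case above, adding the condition as an input can be as simple as appending a one-hot encoding to the feature vector. This is only a sketch; `source_type` and its two categories are hypothetical examples.

```python
def add_source_feature(features, source_type):
    """Append a one-hot encoding of a known condition to the input vector.

    `source_type` and its two categories are hypothetical examples.
    """
    categories = ("news_website", "individual")
    if source_type not in categories:
        raise ValueError(f"unknown source_type: {source_type!r}")
    one_hot = [1.0 if source_type == c else 0.0 for c in categories]
    return list(features) + one_hot


x = add_source_feature([0.3, 1.7], "news_website")
print(x)  # [0.3, 1.7, 1.0, 0.0]
```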

Single Model

Problems that seem simple enough for a medium or large ML model to handle should stay away from the Cascade design pattern. In such problems, the patterns and combinations are implied by the data itself and can be learned by a single model.

Internal Consistency

The Cascade is needed when we need to maintain internal consistency among the predictions of multiple models.

Suppose we are training a model to predict a customer's propensity to buy in order to make a discounted offer. Whether or not we make a discounted offer, and the amount of the discount, will very often depend on whether this customer is comparison shopping or not. Given this, we need internal consistency between the two models (the model for comparison shoppers and the model for propensity to buy). In this case, the Cascade design pattern might be needed.

Pre-trained Model

The cascade is also needed when we wish to reuse the output of a pre-trained model as an input into our model.

Say we want to train a model that can convert a page full of mathematical formulas into LaTeX. We might have an OCR model that can do this, but only if given a photo of a single formula and not a page filled with formulas.

We can build a cascade: train a YOLO model to detect the individual formulas on a page and then forward its output to our OCR model. It is critical to recognize that the YOLO model will make errors, so we should not train the OCR model on a perfect training set of photos and corresponding LaTeX formulas. Instead, we should train the OCR model on the actual output of the YOLO model.
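One way to build such a training set, sketched under assumptions: take the boxes the detector actually produced, match each to the best-overlapping labeled formula by intersection over union (IoU), and keep the detector's box (not the hand-drawn one) as the crop to train on. The box format, the `min_iou` threshold and all sample values are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def build_ocr_training_pairs(detected_boxes, labeled_formulas, min_iou=0.5):
    """Pair each detected box with the LaTeX of the best-overlapping label.

    `labeled_formulas` is a list of (ground_truth_box, latex) tuples.
    Detections that overlap no label well enough are dropped.
    """
    if not labeled_formulas:
        return []
    pairs = []
    for det in detected_boxes:
        best_box, best_latex = max(labeled_formulas, key=lambda item: iou(det, item[0]))
        if iou(det, best_box) >= min_iou:
            pairs.append((det, best_latex))
    return pairs


# Made-up page: two labeled formulas and three detector outputs.
labeled = [((10, 10, 110, 40), r"E = mc^2"), ((10, 60, 110, 90), r"a^2 + b^2 = c^2")]
detected = [(12, 8, 108, 42), (15, 62, 120, 92), (200, 200, 220, 220)]
pairs = build_ocr_training_pairs(detected, labeled)
print(len(pairs))  # 2 (the third detection matches no labeled formula)
```

The OCR model would then be trained on image crops taken at the detected boxes, so it sees the same slightly shifted or clipped formulas it will receive at prediction time.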

A common scenario for using a pre-trained model as the first step of a pipeline is an object-detection model followed by a fine-grained image classification model. In that case, Cascade is recommended so that the entire pipeline can be retrained whenever the object-detection model is updated.

Reframing instead of Cascade

Suppose we wish to predict hourly sales amounts. Most of the time we'll serve retail buyers, but once in a while we'll have a wholesale buyer.

Reframing the regression problem as a classification problem over ranges of sales amounts might be a better approach than trying to get the retail versus wholesale classification correct.
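A small sketch of that reframing: each sales amount is mapped to the index of the range it falls into, and those indices become the class labels. The bucket boundaries below are made up; in practice they would be chosen from the data.

```python
from bisect import bisect_right

# Hypothetical bucket boundaries for hourly sales, in currency units.
BOUNDARIES = [100, 500, 2000, 10000]


def sales_to_bucket(amount):
    """Map a sales amount to a class index in 0..len(BOUNDARIES)."""
    return bisect_right(BOUNDARIES, amount)


hourly_sales = [42.0, 480.0, 530.0, 25000.0]
labels = [sales_to_bucket(a) for a in hourly_sales]
print(labels)  # [0, 1, 2, 4]
```

With this framing, the rare wholesale hours simply land in the top buckets, and the classifier outputs a probability for each range instead of a single point estimate.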

Regression in rare situations

The Cascade design pattern can be helpful when carrying out regression where some values are much more common than others. For example, suppose we want to predict the amount of rainfall from a satellite image, and on 99% of the pixels it doesn't rain. In such cases, we can:

- First, predict whether or not it is going to rain for each pixel.

- For pixels where the model predicts that rain is not likely, predict a rainfall amount of zero.

- Train a regression model to predict the rainfall amount on pixels where the model predicts that rain is likely.
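The steps above can be sketched at prediction time as follows. The classifier and regressor are toy stand-ins, and the `brightness` feature, the threshold and the numbers are all hypothetical.

```python
def predict_rainfall(pixels, rain_probability, rain_amount, threshold=0.5):
    """Two-stage prediction: classify first, regress only where rain is likely.

    `rain_probability` and `rain_amount` are hypothetical stand-ins for the
    trained classifier and regressor; each takes one pixel's features.
    """
    predictions = []
    for pixel in pixels:
        if rain_probability(pixel) >= threshold:
            # Clamp at zero, since a rainfall amount cannot be negative.
            predictions.append(max(0.0, rain_amount(pixel)))
        else:
            predictions.append(0.0)
    return predictions


# Toy stand-ins keyed on a single made-up "brightness" feature.
def toy_classifier(pixel):
    return pixel["brightness"]


def toy_regressor(pixel):
    return 20.0 * pixel["brightness"] - 5.0


pixels = [{"brightness": 0.25}, {"brightness": 0.4}, {"brightness": 0.75}]
print(predict_rainfall(pixels, toy_classifier, toy_regressor))  # [0.0, 0.0, 10.0]
```

In keeping with the cascade design, the regressor would be trained only on pixels where the classifier predicts rain, not on pixels where rain was actually observed.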

That's all for today. Hope you learned something new.

This is Anurag Dhadse, signing off.