What is Keras?

Keras is a high-level Deep Learning API (Application Programming Interface) that allows us to easily build, train, evaluate, and execute all sorts of neural networks. It abstracts away the underlying Deep Learning libraries (its backends), such as TensorFlow, Microsoft Cognitive Toolkit (CNTK), and Theano.

What is TensorFlow?

TensorFlow is a Deep Learning library that also provides a large set of tools for numerical computation and large-scale Machine Learning. It also provides TensorBoard for visualizing models, TensorFlow Extended (TFX) for productionizing TensorFlow projects, and much more.

Let's get started

To build neural networks in TensorFlow with Keras, we can use TensorFlow's own implementation of Keras, `tf.keras`. Let's import it:

```python
import tensorflow as tf
from tensorflow import keras
```

Keras offers three different APIs for creating a neural network, depending on the complexity of the model. These are:

- Sequential API

- Functional API

- Subclassing API

We'll go through them one by one.

Building a simple model using Keras Sequential API

This API is generally preferred for simple use cases, and sometimes for baseline models. It builds the model as a linear stack of layers, one on top of the other.

Here we create a classification MLP (multilayer perceptron) for the MNIST dataset with two hidden layers.

```python
model = keras.models.Sequential()
model.add(keras.layers.Flatten(input_shape=[28, 28]))
model.add(keras.layers.Dense(300, activation="relu"))
model.add(keras.layers.Dense(100, activation="relu"))
model.add(keras.layers.Dense(10, activation="softmax"))
```

A little insight here:

- First is a `Flatten` layer, whose purpose is to convert each input into a 1D array. Intuitively, for a batch of inputs `X` it performs `X.reshape(len(X), -1)`, turning each 28x28 image into a vector of 784 values. We also set the `input_shape` to 28x28, since the MNIST dataset consists of 28x28-pixel images of handwritten digits.
- Then we stacked two `Dense` layers, both with `"relu"` activation.
- Finally, we added a `Dense` output layer with 10 neurons (one per class) and `"softmax"` activation, since this model is for a multiclass classification task.
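The following NumPy sketch shows what `Flatten` does to the shape of a batch. It only mimics the layer's reshaping on random data and is not the Keras implementation:

```python
import numpy as np

# A fake batch of 32 grayscale "images", 28x28 pixels each (random values, not real MNIST data)
X = np.random.rand(32, 28, 28)

# Flatten keeps the batch dimension and collapses the rest: (32, 28, 28) -> (32, 784)
X_flat = X.reshape(len(X), -1)
print(X_flat.shape)  # (32, 784)

# Each image is read row by row, so pixel (row i, column j) ends up at index i * 28 + j
assert X_flat[0, 1 * 28 + 5] == X[0, 1, 5]
```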

But there is a simpler way to write this. We can pass the list of layers directly to the `Sequential` constructor:

model = keras.models.Sequential([

keras.layers.Flatten(input_shape=[28, 28]),

keras.layers.Dense(300, activation="relu"),

keras.layers.Dense(100, activation="relu"),

keras.layers.Dense(10, activation="softmax")

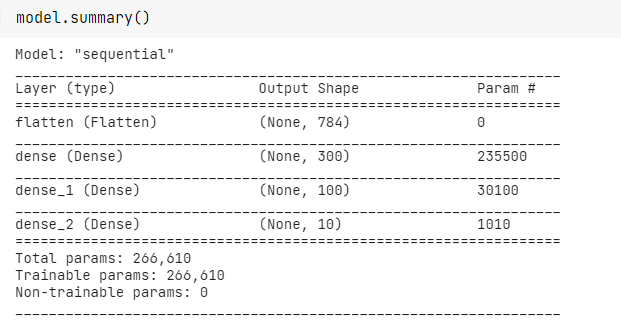

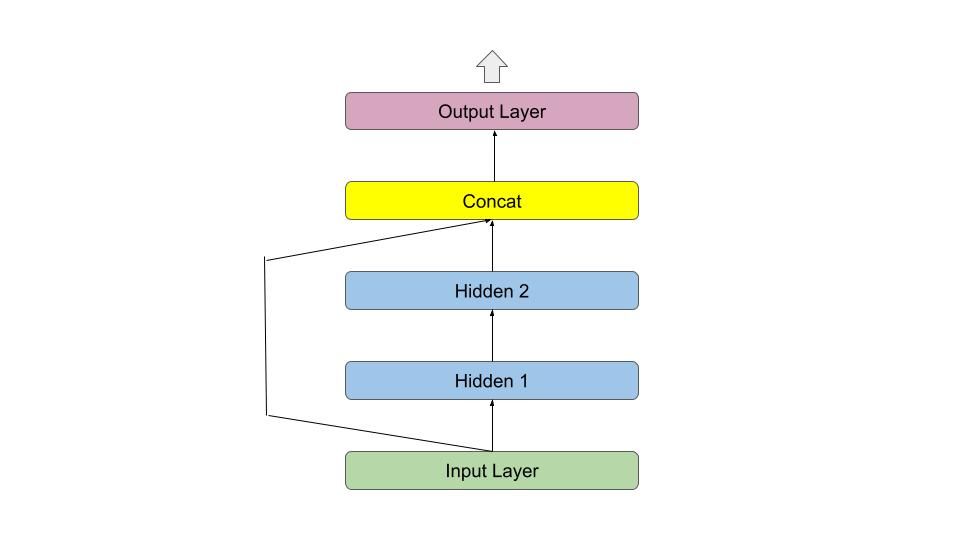

])Now we can call, model's summary() method to display model's all layers and parameters.

- The `Flatten` layer, as mentioned earlier, flattens each 28x28 input into a vector of 784 values. It has no parameters of its own.
- The next layer, as per our code, has 300 neurons. Each neuron is connected to every neuron in the previous layer (hence a `Dense` layer). This gives 784 × 300 = 235,200 weights plus 300 biases (one per neuron), for a total of 235,500 trainable parameters.
- The same calculation applies to the other layers: 300 × 100 + 100 = 30,100 parameters for the second hidden layer and 100 × 10 + 10 = 1,010 for the output layer.
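The parameter arithmetic above can be reproduced in plain Python. Here `dense_params` is a hypothetical helper written for illustration and is not part of Keras. Its totals should match what `summary()` reports for this model:

```python
def dense_params(n_inputs, n_units):
    """Trainable parameters of a fully connected layer:
    one weight per (input, unit) pair plus one bias per unit."""
    return n_inputs * n_units + n_units


# Layer sizes of the MNIST model: 784 flattened inputs -> 300 -> 100 -> 10
sizes = [28 * 28, 300, 100, 10]
per_layer = [dense_params(n_in, n_out) for n_in, n_out in zip(sizes[:-1], sizes[1:])]

print(per_layer)       # [235500, 30100, 1010]
print(sum(per_layer))  # 266610 (Flatten contributes 0)
```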

Compiling the model

The model's `compile()` method specifies the loss function and the optimizer to use. We can also specify extra metrics we want to compute during training and evaluation.

```python
model.compile(loss="sparse_categorical_crossentropy",
              optimizer="sgd",  # Stochastic Gradient Descent
              metrics=["accuracy"])
```

We used `"sparse_categorical_crossentropy"` because each instance has a single target class index (0 to 9), and the classes are mutually exclusive.

If instead we had one target probability per class for each instance (a one-hot vector such as [0., 0., 0., 1., 0., 0., 0., 0., 0., 0.] to represent class 3), we would have used `"categorical_crossentropy"`.

For a binary classification task, we would have used `"binary_crossentropy"` (typically with a single output neuron using the `"sigmoid"` activation).
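To see why the sparse and one-hot variants describe the same loss, here is a NumPy sketch that computes both by hand on made-up predicted probabilities. It illustrates the formulas and is not the Keras implementation:

```python
import numpy as np

# Made-up predicted class probabilities: 3 instances, 4 classes (each row sums to 1)
probs = np.array([
    [0.70, 0.10, 0.10, 0.10],
    [0.05, 0.80, 0.10, 0.05],
    [0.25, 0.25, 0.25, 0.25],
])

# Sparse labels: one integer class index per instance
y_sparse = np.array([0, 1, 3])

# One-hot labels: one target probability per class per instance
y_onehot = np.eye(4)[y_sparse]

# Sparse categorical cross-entropy: -log of the probability given to the true class
sparse_ce = -np.log(probs[np.arange(len(probs)), y_sparse])

# Categorical cross-entropy: -sum over classes of target * log(predicted probability)
categorical_ce = -np.sum(y_onehot * np.log(probs), axis=1)

print(np.allclose(sparse_ce, categorical_ce))  # True
```

The per-instance losses are identical. The only difference between the two variants is how the labels are encoded.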

Training a model

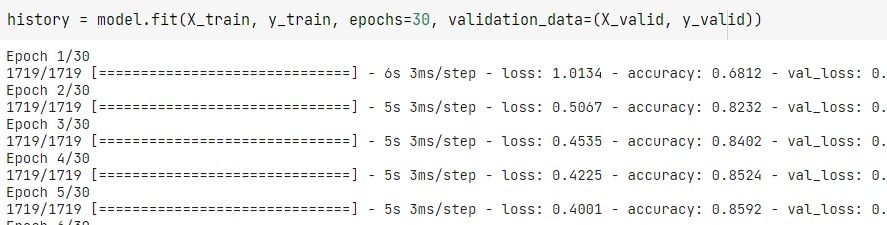

For training, we call the `fit()` method, passing the input features, the labels, and the number of epochs. We can also pass validation data to measure the validation loss and accuracy at the end of each epoch as the model trains.
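Below is a minimal, self-contained training sketch. It does not load MNIST, which would require a download. Instead it assumes random stand-in data with MNIST's shapes, so the accuracy it reaches is meaningless. It also declares the input with `keras.Input` rather than `input_shape`, a style that recent TensorFlow versions accept:

```python
import numpy as np
from tensorflow import keras

# Random stand-in data shaped like MNIST (NOT real images): 28x28 inputs, labels 0-9
rng = np.random.default_rng(42)
X_train = rng.random((256, 28, 28)).astype("float32")
y_train = rng.integers(0, 10, size=256)
X_valid = rng.random((64, 28, 28)).astype("float32")
y_valid = rng.integers(0, 10, size=64)

model = keras.models.Sequential([
    keras.Input(shape=(28, 28)),
    keras.layers.Flatten(),
    keras.layers.Dense(300, activation="relu"),
    keras.layers.Dense(100, activation="relu"),
    keras.layers.Dense(10, activation="softmax"),
])
model.compile(loss="sparse_categorical_crossentropy",
              optimizer="sgd",
              metrics=["accuracy"])

# Train for 3 epochs; validation loss/accuracy are computed at the end of each epoch
history = model.fit(X_train, y_train, epochs=3,
                    validation_data=(X_valid, y_valid), verbose=0)

# One list entry per epoch for each recorded quantity
print(sorted(history.history.keys()))
```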

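This method returns a `History` object. Its `history` attribute is a dictionary containing the loss and the extra metrics measured at the end of each epoch, on the training set and, if provided, on the validation set. We can visualize these learning curves with TensorBoard (using the `keras.callbacks.TensorBoard` callback during training) or by plotting this dictionary with a simple script: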

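```python
import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns

sns.set_theme()
pd.DataFrame(history.history).plot(figsize=(8, 5))  # one curve per loss/metric
plt.grid(True)
plt.gca().set_ylim(0, 1)  # set the vertical range to [0, 1]
plt.show()
```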

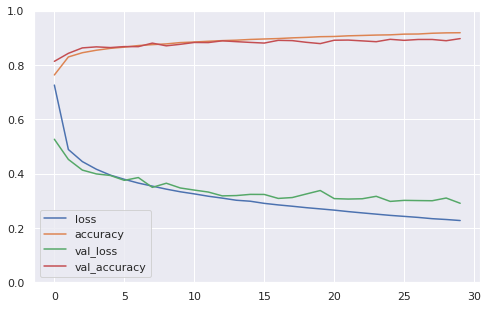

Building Complex models Using the Functional API

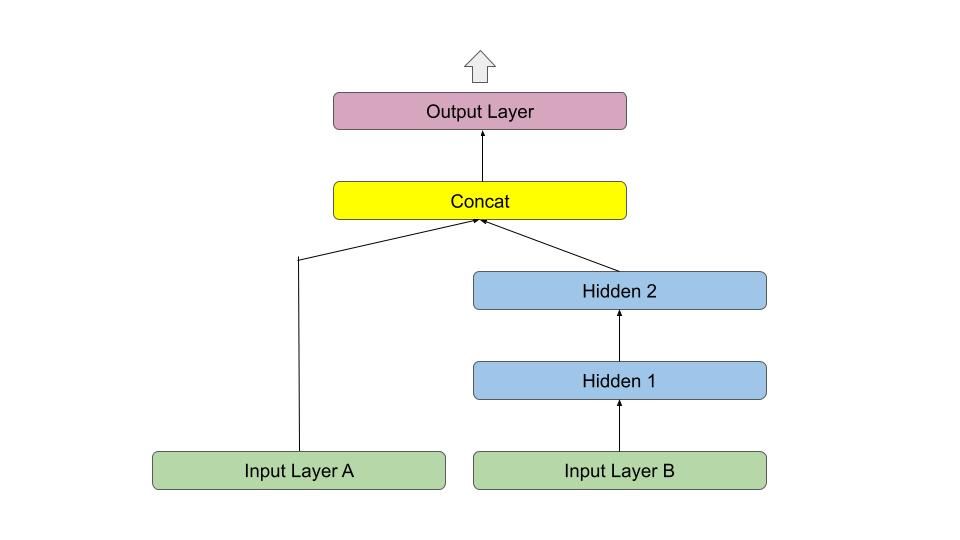

This API is mostly used for building non-sequential neural networks, i.e., networks with multiple inputs, multiple outputs, or connections that pass activations or inputs directly to layers further along the network.

One such example is the Wide & Deep neural network, which connects all or part of the inputs directly to the output layer. This lets it learn both deep patterns (through the deep path) and simple rules (through the short path).

In Keras, we can build it with the Functional API for the California Housing prediction problem like this:

```python
# X_train holds the California Housing training features (loaded and scaled beforehand)
input_ = keras.layers.Input(shape=X_train.shape[1:])
hidden1 = keras.layers.Dense(30, activation="relu")(input_)
hidden2 = keras.layers.Dense(30, activation="relu")(hidden1)
concat = keras.layers.Concatenate()([input_, hidden2])
output = keras.layers.Dense(1)(concat)
model = keras.Model(inputs=[input_], outputs=[output])
```

- Notice how we created an `Input` object that specifies the input shape, and then passed it to a `Dense` layer right after creating that layer, calling the layer like a function.
- This returns the output of the first hidden layer, `hidden1`, which is in turn passed to the second hidden layer to produce `hidden2`.
- Then we needed to concatenate the input with the output of the second hidden layer, so we used a `Concatenate` layer and passed it `input_` and `hidden2`.
- Finally, we told Keras which are the model's inputs and outputs, creating our model object.
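To make the shapes concrete, here is a NumPy-only sketch of a forward pass through this Wide & Deep architecture. It is not Keras code. `dense` is a hypothetical stand-in for a `Dense` layer with random weights, and the 8 input features mirror the California Housing data:

```python
import numpy as np

rng = np.random.default_rng(0)


def relu(z):
    return np.maximum(z, 0.0)


def dense(x, n_units, rng):
    """Hypothetical stand-in for a Dense layer: random weights, zero biases."""
    W = rng.normal(size=(x.shape[1], n_units))
    b = np.zeros(n_units)
    return x @ W + b


# 4 instances with 8 features each
x = rng.normal(size=(4, 8))

hidden1 = relu(dense(x, 30, rng))        # deep path, first hidden layer: (4, 30)
hidden2 = relu(dense(hidden1, 30, rng))  # deep path, second hidden layer: (4, 30)

# Concatenate joins the raw inputs (wide path) with hidden2 (deep path)
# along the feature axis: (4, 8) + (4, 30) -> (4, 38)
concat = np.concatenate([x, hidden2], axis=1)

output = dense(concat, 1, rng)           # single regression output: (4, 1)
print(concat.shape, output.shape)        # (4, 38) (4, 1)
```

The output layer therefore sees the original features unchanged alongside the learned deep features.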

But what if you want multiple inputs or multiple outputs? The Functional API handles this too:

```python
input_A = keras.layers.Input(shape=[5], name="wide_input")
input_B = keras.layers.Input(shape=[6], name="deep_input")
hidden1 = keras.layers.Dense(30, activation="relu")(input_B)  # the deep path starts from input_B
hidden2 = keras.layers.Dense(30, activation="relu")(hidden1)
concat = keras.layers.Concatenate()([input_A, hidden2])       # input_A goes straight to the output
output = keras.layers.Dense(1, name="output")(concat)
model = keras.Model(inputs=[input_A, input_B], outputs=[output])
```

This lets us send a subset of the features through the wide path and a different (possibly overlapping) subset of features through the deep path.
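Assuming 8 input features, as in California Housing, the split used when training this model sends `X[:, :5]` to the wide input and `X[:, 2:]` to the deep input. That gives 5 and 6 features, which matches the two `Input` shapes. Features 2 to 4 go through both paths. A small NumPy sketch on a made-up matrix whose values are the column indices:

```python
import numpy as np

# Made-up feature matrix: 3 instances x 8 features, each value equal to its column index
X = np.tile(np.arange(8), (3, 1))

X_A = X[:, :5]  # wide input: features 0-4 -> shape (3, 5)
X_B = X[:, 2:]  # deep input: features 2-7 -> shape (3, 6)

print(X_A[0])  # [0 1 2 3 4]
print(X_B[0])  # [2 3 4 5 6 7]
```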

One thing to note is that we now need to call the `fit()` method with a pair of inputs instead of a single input matrix. The same applies to the labels if the network has multiple outputs, and to the validation data.

```python
model.compile(loss="mse", optimizer=keras.optimizers.SGD(learning_rate=1e-3))

# Splitting the train, validation and test data
# into different (overlapping) sets of features
X_train_A, X_train_B = X_train[:, :5], X_train[:, 2:]
X_valid_A, X_valid_B = X_valid[:, :5], X_valid[:, 2:]
X_test_A, X_test_B = X_test[:, :5], X_test[:, 2:]
X_new_A, X_new_B = X_test_A[:3], X_test_B[:3]  # pretend these are new, unseen instances

# Providing a tuple of inputs for the training and validation data
history = model.fit((X_train_A, X_train_B), y_train,
                    epochs=20,
                    validation_data=((X_valid_A, X_valid_B), y_valid))

# Evaluation and prediction inputs are split the same way
mse_test = model.evaluate((X_test_A, X_test_B), y_test)
y_pred = model.predict((X_new_A, X_new_B))
```

To avoid passing the inputs in the wrong order when there are many of them, it can help to pass a dictionary of inputs instead, with each key being the name of the corresponding input:

```python
history = model.fit({"wide_input": X_train_A, "deep_input": X_train_B},
                    y_train,
                    epochs=20,
                    validation_data=({"wide_input": X_valid_A,
                                      "deep_input": X_valid_B}, y_valid))
```

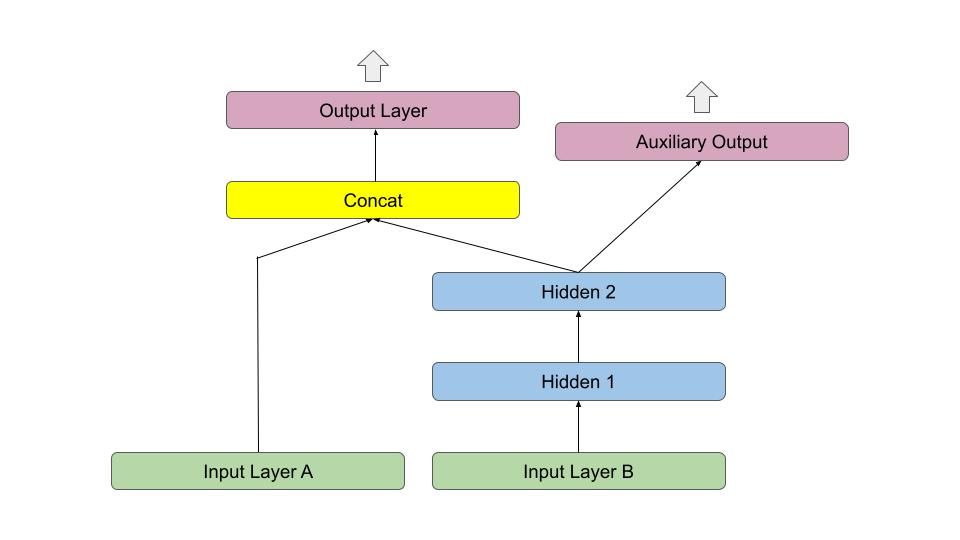

In the same way, we can give this model multiple outputs. For example, we can add an auxiliary output on top of `hidden2`:

```python
# [...] Same as above, up to the main output layer
output = keras.layers.Dense(1, name="main_output")(concat)
aux_output = keras.layers.Dense(1, name="aux_output")(hidden2)
model = keras.Model(inputs=[input_A, input_B],
                    outputs=[output, aux_output])
```

Each output needs its own loss function, which is why we pass a list of losses when we compile the model. It can also help to provide a `loss_weights` list to give more weight to one of the outputs.

```python
model.compile(loss=["mse", "mse"], loss_weights=[0.9, 0.1], optimizer="sgd")
```

For training, as mentioned earlier, we need to provide labels for each output. Which labels to use depends entirely on the task the model is being built for.

During evaluation, Keras returns the total loss as well as each individual output's loss.
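With `loss_weights=[0.9, 0.1]`, the total loss is the weighted sum of the per-output losses. Keras also adds any regularization losses, but this model has none. Here is a tiny arithmetic sketch with made-up loss values, not real results:

```python
# Made-up per-output losses (e.g. MSE values); not real training results
main_loss = 0.40
aux_loss = 0.90
loss_weights = [0.9, 0.1]

# Weighted sum of the per-output losses, which is what gets minimized and reported as the total
total_loss = loss_weights[0] * main_loss + loss_weights[1] * aux_loss
print(round(total_loss, 4))  # 0.45
```

With these weights, the main output dominates training, while the auxiliary output still contributes a small regularizing term.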

```python
# Here we pass the same labels, y_test, for both outputs,
# as the auxiliary output is just for regularization
total_loss, main_loss, aux_loss = model.evaluate([X_test_A, X_test_B],
                                                 [y_test, y_test])
```

The model's `predict()` method likewise returns the predictions of each output layer:

```python
y_pred_main, y_pred_aux = model.predict([X_new_A, X_new_B])
```

Using Subclassing API to Build Dynamic Models

In some cases, a model may involve loops, varying shapes, conditional branching, and other dynamic behavior. The Keras Subclassing API is designed for exactly this. To use it, we:

- Subclass the `keras.Model` class.
- Create the layers in the constructor.
- Perform the computations using these layers in the `call()` method.

Let's build the same Wide & Deep model using the Subclassing API:

```python
class WideAndDeepModel(keras.Model):
    def __init__(self, units=30, activation="relu", **kwargs):
        super().__init__(**kwargs)  # handles standard arguments (name, etc.)
        self.hidden1 = keras.layers.Dense(units, activation=activation)
        self.hidden2 = keras.layers.Dense(units, activation=activation)
        self.main_output = keras.layers.Dense(1)
        self.aux_output = keras.layers.Dense(1)

    def call(self, inputs):
        input_A, input_B = inputs
        hidden1 = self.hidden1(input_B)
        hidden2 = self.hidden2(hidden1)
        concat = keras.layers.concatenate([input_A, hidden2])
        main_output = self.main_output(concat)
        aux_output = self.aux_output(hidden2)
        return main_output, aux_output


model = WideAndDeepModel()
```

This flexibility comes at a cost, however: Keras cannot inspect the model's architecture. As a result, it cannot easily save or clone the model (although you can still save its weights with `save_weights()`), and the `summary()` method only lists the layers, without any information about how they are connected. Moreover, Keras cannot check types and shapes ahead of time.

It is also quite easy to make mistakes, so unless you really need this flexibility, prefer the Sequential or Functional API.
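The Wide & Deep model above doesn't actually use any dynamic behavior. As a sketch of the flexibility subclassing allows, here is a hypothetical model, `StackedDenseModel`, that is not taken from Keras. Its depth is a constructor argument, and its `call()` runs a plain Python loop over the hidden layers. It assumes TensorFlow with `tf.keras` is installed and uses a random input batch:

```python
import numpy as np
from tensorflow import keras


class StackedDenseModel(keras.Model):
    """Hypothetical example: a regressor whose number of hidden layers is
    chosen at construction time and whose call() loops over them."""

    def __init__(self, n_hidden=3, units=16, activation="relu", **kwargs):
        super().__init__(**kwargs)
        # A list of layers created in the constructor; Keras tracks list attributes
        self.hidden = [keras.layers.Dense(units, activation=activation)
                       for _ in range(n_hidden)]
        self.out = keras.layers.Dense(1)

    def call(self, inputs):
        x = inputs
        for layer in self.hidden:  # plain Python loop over however many layers were requested
            x = layer(x)
        return self.out(x)


model = StackedDenseModel(n_hidden=3)

# Random stand-in batch: 4 instances with 8 features each
X = np.random.rand(4, 8).astype("float32")
y = model(X)  # the first call builds each layer from the input shape
print(y.shape)  # (4, 1)
```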

Keep Learning.