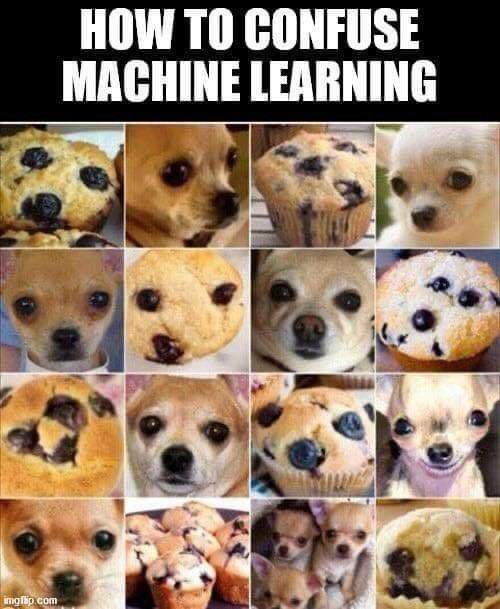

Let me begin this article by asking you a question. What do you see in the pictures below?

A cat or a dog?

Much classic ML literature begins with Linear Regression and then transitions to Logistic Regression for classification.

The simplest case of classification is Binary Classification: Cat or Not Cat, Dog or Not Dog, Cat or Dog. You get it. There is one Positive Class and one Negative Class.

For academic literature, this works fine. But when it comes to complex, real-world problems, at times we might need more than just 2 classes.

Coming back to my original question: the first image is probably just a picture of a dog augmented to look like a cat, and the second image is of a cat.

For ML models, such examples are really tricky, and they are hard to come by out in the wild.

So, in real-world classification tasks, creating a Neutral class can be helpful.

For example, instead of training a binary classifier for cat/dog, we can train a three-class classifier that outputs probabilities for Cat, Dog, or Both.

The classes behind these output probabilities are meant to be disjoint, i.e., they should not overlap. Continuing the previous example, the classes should not be Cat, Dog, and Chihuahua, because every Chihuahua is also a dog.
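To make the three-class output concrete, here is a minimal sketch of how raw model scores can be turned into one probability per disjoint class with a softmax. The scores in `logits` are made up for illustration and do not come from a real model.

```python
import math

CLASSES = ["cat", "dog", "both"]  # "both" plays the role of the neutral class

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for one ambiguous image (assumed values).
logits = [1.2, 1.0, 2.5]
probs = softmax(logits)

# Because the classes are disjoint, we pick exactly one of them.
prediction = CLASSES[probs.index(max(probs))]
print(dict(zip(CLASSES, probs)), prediction)
```

Since the three probabilities share a single budget of 1, the model can put its weight on the neutral class instead of being forced to choose between Cat and Dog.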

Let's say a labeller is tasked with giving a positive or negative label to the texts below for a sentiment analysis dataset:

- "Brilliant effort guys! Loved your work" The labeller will assign the "Positive" class.

- "Totally dissatisfied with the service." The labeller will assign the "Negative" class.

- "Fine but I will expect a lot more in future."

For the last example, different labellers may assign different classes, maybe Positive, maybe Negative. The decision is arbitrary.

So a binary classifier trained on such a dataset will show poor accuracy, because the model is asked to get essentially arbitrary cases correct. For that subset of data points either label could be true, yet the accuracy suffers purely because of how the data was labelled.
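A rough back-of-the-envelope sketch of this effect follows. It assumes (my simplification, not a measured result) that the arbitrary labels behave like a fair coin flip and that the model is perfect on every clear-cut example.

```python
def accuracy_ceiling(arbitrary_fraction):
    """Best expected accuracy of a binary classifier when a fraction of the
    labels is effectively a coin flip between the two classes.

    Assumption: the model gets every clear-cut example right and can only
    match a coin-flip label half of the time.
    """
    clear_fraction = 1.0 - arbitrary_fraction
    return clear_fraction * 1.0 + arbitrary_fraction * 0.5

for fraction in (0.0, 0.1, 0.3):
    ceiling = accuracy_ceiling(fraction)
    print(f"{fraction:.0%} arbitrary labels -> accuracy ceiling {ceiling:.0%}")
```

Under these assumptions, every extra percent of arbitrary labels costs about half a percent of achievable accuracy, no matter how good the model is.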

Solution

Solving this requires us to design the data collection procedure appropriately. The labelling instructions need to be to the point, leaving no room for an arbitrary decision or preference by the labeller.

Also, we cannot create a neutral class after the fact, once the data has already been labelled.

But in the case of historical datasets, like prescriptions given by doctors, we would need to get a labelling service involved. In that case, we could ask them to validate the data points, confirm that they conform to the labelling instructions, and generate a Neutral class as required.
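One way such a labelling pass could produce a Neutral class is to collect several votes per data point and treat disagreement as neutral. The sketch below is only an illustration: the function name `aggregate_label` and the `min_agreement` parameter are hypothetical, not part of any labelling service.

```python
from collections import Counter

def aggregate_label(votes, min_agreement=1.0):
    """Return the majority label if enough labellers agree, else 'neutral'.

    votes: list of labels from different labellers for one data point.
    min_agreement: fraction of votes the top label needs (assumed knob;
    1.0 means the labellers must be unanimous).
    """
    label, count = Counter(votes).most_common(1)[0]
    if count / len(votes) >= min_agreement:
        return label
    return "neutral"

print(aggregate_label(["positive", "positive", "positive"]))
print(aggregate_label(["positive", "negative", "positive"]))
print(aggregate_label(["positive", "negative", "positive"], min_agreement=0.6))
```

With the default setting, any disagreement sends the data point to the neutral class. Lowering `min_agreement` trades a smaller neutral class for noisier positive and negative labels.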

Alternatives and Applications

When labellers disagree

As explained earlier, this ambiguity arises when human experts disagree on the label for a data point. It might seem far simpler to discard these data points and move on with binary classification. After all, it doesn't matter what the model does on the neutral cases.

This has two problems:

- False confidence tends to affect the acceptance of the model by human experts, since the model is essentially trying to learn arbitrary labels.

- If we are training a cascade of models, then downstream models will be extremely sensitive to the neutral class. If we continue to improve this model, downstream models could change dramatically from version to version.

That's why using a neutral class to capture areas of disagreement allows us to disambiguate the inconsistent labelling.

Customer feedback/satisfaction ratings & sentiment analysis

Often, with 5-star feedback ratings, it might be helpful to bucket the ratings into three categories: 1-2 as bad, 4-5 as good, and 3 as neutral. Sentiment analysis follows the same requirement, since a piece of text can carry neutral sentiment.

If we instead attempt to train a binary classifier for feedback ratings by thresholding at 4, the model will spend too much effort trying to get the neutral data points correct.
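A minimal sketch of the three-way bucketing, assuming the split is 1-2 stars as bad, 3 stars as neutral, and 4-5 stars as good. The function name `rating_to_class` is hypothetical.

```python
def rating_to_class(stars):
    """Map a 1-5 star rating to 'bad', 'neutral', or 'good'."""
    if not 1 <= stars <= 5:
        raise ValueError(f"expected a rating between 1 and 5, got {stars}")
    if stars <= 2:
        return "bad"
    if stars == 3:
        return "neutral"
    return "good"

ratings = [5, 3, 1, 4, 2]
labels = [rating_to_class(r) for r in ratings]
print(list(zip(ratings, labels)))
```

The resulting labels can then be used as the target of a three-class classifier, so the model is never forced to decide whether a 3-star review is good or bad.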

Reframing with neutral class

Automated stock trading systems based on ML might seem to be a very simple case of binary classification: decide whether or not to purchase a stock based on whether the model expects the security to go up or down in price.

Because of stock market volatility and the trading costs that come with the risk of making a bad decision, such a system is not viable. We would be unable to buy every stock that we predict will go up, and unable to sell stocks that we don't hold.

In such cases, it is helpful to consider what the end goal is.

The end goal here is not to predict whether a stock will go up or down.

Instead, a better strategy might be to buy call options for a few stocks that are most likely to go up more than a certain percentage over a period of time, and buy put options for a few stocks that are most likely to go down more than a certain percentage over a period of time.

A little finance education might be needed here. Let me explain:

- A call option is a contract that gives its owner the right, but not the obligation, to buy a stock at a specified strike price on or before a specified date. The buyer pays a premium for this right.

- A put option is a contract that gives its owner the right, but not the obligation, to sell a stock at a specified strike price on or before a specified date. The buyer pays a premium for this right.

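Both allow us (the buyer) to bear a limited loss (since we only paid the premium and didn't actually buy or sell the stock) while keeping a large potential profit: unlimited in theory for a call, and capped at the strike price for a put, since a stock price cannot fall below zero.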

Let's take an example. We purchase a call option for a stock with a current price of ₹2000, which gives us the right to buy at a predetermined strike price of ₹2000, expecting the price of the underlying stock to go up. When the stock price goes up, say to ₹3000, we exercise our right and buy the stock at the strike price of ₹2000, for a notional gain of ₹1000 (before subtracting the premium).

And for put options: we purchase a put option for a stock with a current price of ₹2000, which gives us the right to sell at a predetermined strike price of ₹2000, expecting the price of the underlying stock to go down. When the stock price goes down, say to ₹500, we exercise our right and sell the stock at the strike price of ₹2000 after buying it at ₹500, for a notional gain of ₹1500 (before subtracting the premium).
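The two worked examples can be checked with a small payoff sketch. The function names and the ₹100 premium used at the end are assumptions for illustration. The article does not state a premium, so it defaults to zero to match the notional gains above.

```python
def call_payoff(spot, strike, premium=0.0):
    """Gain per share for a call buyer when the stock trades at `spot`.
    If the option is not worth exercising, the loss is just the premium."""
    return max(spot - strike, 0.0) - premium

def put_payoff(spot, strike, premium=0.0):
    """Gain per share for a put buyer when the stock trades at `spot`."""
    return max(strike - spot, 0.0) - premium

# The article's examples: strike of 2000, premium ignored.
print(call_payoff(spot=3000, strike=2000))  # stock rose to 3000
print(put_payoff(spot=500, strike=2000))    # stock fell to 500

# A wrong call with a hypothetical premium of 100: the loss stays limited.
print(call_payoff(spot=1800, strike=2000, premium=100))
```

The `max(..., 0.0)` is what encodes "the right, but not the obligation": when exercising would lose money, the buyer simply lets the option expire and is out only the premium.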

The solution, therefore, is to create a training dataset consisting of three classes:

- Stocks that went up more than a specific percentage: call.

- Stocks that went down more than a specific percentage: put.

- The remaining stocks: neutral.
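The labelling rule for these three classes could be sketched as follows. The 5% threshold is a placeholder I picked for illustration. The right percentage and time window depend on the strategy.

```python
def label_return(start_price, end_price, threshold=0.05):
    """Label a stock by its relative price change over the period.

    threshold: hypothetical cut-off (5% here) for a move to count.
    """
    change = (end_price - start_price) / start_price
    if change > threshold:
        return "call"
    if change < -threshold:
        return "put"
    return "neutral"

print(label_return(100, 110))  # up 10%
print(label_return(100, 90))   # down 10%
print(label_return(100, 102))  # up 2%, inside the neutral band
```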

Rather than training a regression model, which would have predicted which stocks will go up or down and probably landed us on the footpath, we reframe the problem: we train a classification model with these three classes and pick the most confident predictions from our model.
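Picking the most confident predictions might look like the sketch below. The tickers and probabilities are invented, and `top_confident` is a hypothetical helper rather than part of any trading library.

```python
def top_confident(predictions, label, k=2):
    """Return up to k tickers whose most likely class is `label`,
    ordered from most to least confident.

    predictions: dict mapping ticker -> dict of class probabilities.
    """
    candidates = [
        (probs[label], ticker)
        for ticker, probs in predictions.items()
        if max(probs, key=probs.get) == label
    ]
    candidates.sort(reverse=True)
    return [ticker for _, ticker in candidates[:k]]

# Made-up model outputs for four hypothetical tickers.
predictions = {
    "AAA": {"call": 0.80, "put": 0.05, "neutral": 0.15},
    "BBB": {"call": 0.40, "put": 0.10, "neutral": 0.50},
    "CCC": {"call": 0.10, "put": 0.75, "neutral": 0.15},
    "DDD": {"call": 0.60, "put": 0.10, "neutral": 0.30},
}

print(top_confident(predictions, "call"))
print(top_confident(predictions, "put"))
```

Stocks whose most likely class is neutral, such as the hypothetical `BBB`, are never traded. That is exactly the job of the neutral class here.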

That's all for today.

This is Anurag Dhadse, signing off.